The objective of this lab is to become familiar with the software program Pix4D. This software is easy to use and is one of the leading programs for processing unmanned aerial system (UAS) imagery, particularly for constructing point clouds. Pix4D uses drone imagery to create georeferenced maps and models. In this lab, Pix4D was used to calculate volumes and create animations, and its outputs were then used to create maps.

For Pix4D to process imagery well, the images should have at least 75% frontal overlap. To keep the imagery consistent, the user should capture the images in a uniform grid pattern over the study area. When flying over sand, snow, or uniform fields, the frontal overlap should be increased to at least 85%. A unique feature of Pix4D is Rapid Check, which runs inside the software. It is an alternative initial processing mode that trades accuracy for speed: it processes the images quickly, at lower accuracy, so that the user can determine whether sufficient coverage was obtained.
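The overlap percentages above translate directly into how far apart consecutive images can be taken. As a minimal sketch, assuming a known ground footprint per image (the 80 m value below is hypothetical, not taken from this lab's flight), the spacing between image centers is the footprint multiplied by one minus the overlap. The same relationship applies across track when spacing flight lines for side overlap.

```python
def photo_spacing(footprint_m: float, overlap: float) -> float:
    """Distance between consecutive image centers that yields the given overlap.

    footprint_m: ground length covered by one image in the direction of interest (metres).
    overlap: fraction of the footprint shared by neighbouring images (0 <= overlap < 1).
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    # Each new image only needs to advance by the part of the footprint that is not shared.
    return footprint_m * (1.0 - overlap)


if __name__ == "__main__":
    footprint = 80.0  # hypothetical along-track footprint in metres
    for overlap in (0.75, 0.85):
        spacing = photo_spacing(footprint, overlap)
        print(f"{overlap:.0%} frontal overlap -> one image every {spacing:.1f} m")
```

Raising the overlap from 75% to 85% shrinks the spacing, so more images are captured over the same area, which is why low-texture surfaces such as sand or snow require more images.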

Another useful feature of Pix4D is that it can process images from multiple flights together. However, each flight must have enough overlap on its own, and there must also be enough overlap between flights. The flights should be flown at about the same height, because different flight heights produce images with different spatial resolutions. The images should also be taken under similar weather conditions and sun direction. Sufficient overlap is likewise important when processing oblique images.
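Flight height matters because it sets the ground sampling distance (GSD), the ground distance covered by one pixel. The sketch below uses the common pinhole-camera relation GSD = (sensor width × flight height) / (focal length × image width). The camera values are hypothetical and do not describe the drone used to collect this lab's data.

```python
def ground_sampling_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                                image_width_px: int, flight_height_m: float) -> float:
    """GSD in centimetres per pixel from the pinhole-camera relation.

    GSD = (sensor width * flight height) / (focal length * image width).
    The millimetre units cancel; the factor of 100 converts metres to centimetres.
    """
    return (sensor_width_mm * flight_height_m * 100.0) / (focal_length_mm * image_width_px)


if __name__ == "__main__":
    # Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
    for height in (60.0, 100.0):
        gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, height)
        print(f"{height:.0f} m flight height -> {gsd:.2f} cm/pixel")
```

Because GSD grows linearly with height, doubling the flight height doubles the GSD, so images from flights at very different heights show the ground at different levels of detail.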

Pix4D can also use ground control points (GCPs) to help adjust and georeference the overlapping images, but GCPs are not required by the software. There are certain scenarios where using GCPs is recommended, but they did not apply to this lab. When using this software, quality reports are displayed after each step of processing to inform the user whether a task has failed or succeeded.

Methods:

The first task was calculating the volumes of several piles in the mine landscape data provided by the professor of this course, Dr. Hupy. With the volume tool, the boundary of the object of interest (in this case, sand piles) is traced by left-clicking to place points around the object and right-clicking to close the boundary. Clicking the Compute button then returns the volume of that pile. This process was repeated for three different piles on the landscape. The volume measurements (Figure 1) and images of the piles (Figure 2) are shown below.

Figure 1: Volume Measurements of Sand Piles

Figure 2: Image of Sand Piles That Were Used for Calculating Volumes
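Conceptually, a volume measurement sums the height of the surface above a base over every cell inside the traced boundary. The sketch below illustrates that idea on a tiny gridded surface model. It assumes a flat base at a chosen elevation and a ray-casting point-in-polygon test on cell centers; this is a simplification, not Pix4D's actual implementation, which may compute the base surface differently. The grid, boundary, and function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in metres, origin at the top-left corner of the grid


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: inside if a ray toward +x crosses the boundary an odd number of times."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]  # wrap around to close the boundary
        if (y1 > y) != (y2 > y):  # this edge straddles the ray's y value
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def volume_above_base(dsm: List[List[float]], cell_size: float,
                      boundary: Sequence[Point], base_z: float) -> float:
    """Sum (surface height - base height) * cell area for cells whose centers lie inside the boundary."""
    cell_area = cell_size * cell_size
    total = 0.0
    for row, values in enumerate(dsm):
        for col, z in enumerate(values):
            cx = (col + 0.5) * cell_size  # cell center, x grows to the east
            cy = (row + 0.5) * cell_size  # cell center, y grows downward in row order
            if point_in_polygon(cx, cy, boundary):
                total += max(z - base_z, 0.0) * cell_area  # cells below the base add nothing
    return total


if __name__ == "__main__":
    # Hypothetical 10 x 10 surface with 1 m cells: flat ground at 100 m and a 4 x 4 pile 2 m high.
    dsm = [[100.0] * 10 for _ in range(10)]
    for r in range(3, 7):
        for c in range(3, 7):
            dsm[r][c] = 102.0
    # Boundary "traced" around the pile, like the points clicked with the volume tool.
    boundary = [(2.0, 2.0), (8.0, 2.0), (8.0, 8.0), (2.0, 8.0)]
    print(f"Volume: {volume_above_base(dsm, 1.0, boundary, base_z=100.0):.1f} m^3")
```

In practice the choice of base surface has a large effect on the result, since any error in the base height is multiplied by the whole area inside the boundary.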

Figure 3: Video Animation of Two of the Three Volume Piles

Figure 4: Video Animation of One of the Three Volume Piles

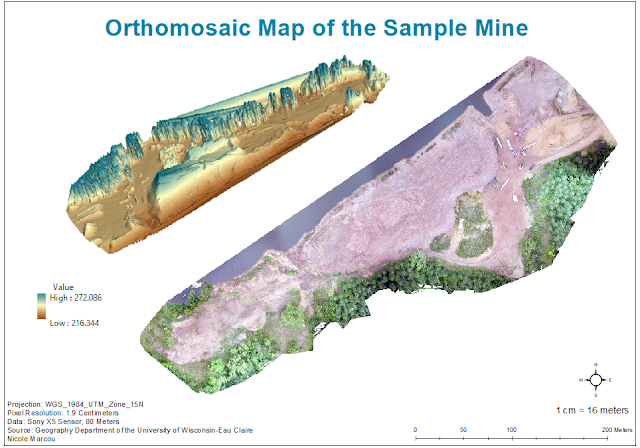

The last task was making two maps in ArcMap using the data from Pix4D. One map shows an orthomosaic image (Figure 5) of the sample mine landscape, and the second shows the digital surface model (DSM) (Figure 6) of the mine. Both maps also include an image of the mine from ArcScene that shows the elevated surface at a tilt for additional reference. A hillshade was also used to help represent the topography of the mine landscape. Each map shows the mine in a different way, which can help the viewer analyze the landscape. The orthomosaic shows the natural appearance of the landscape, and the DSM shows elevation, with red areas higher than green areas. The tilted ArcScene image also shows elevation, with bluer areas higher and browner areas lower. However, this can be slightly misleading because a DSM includes the heights of surface features such as trees, so elevations in wooded areas reflect the treetops rather than the ground.

Figure 5: Orthomosaic Map of the Sample Mine

Figure 6: Digital Surface Model of the Sample Mine
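A hillshade is computed from an elevation surface by modeling how a light source at a given azimuth and altitude illuminates each cell's slope and aspect. The sketch below follows the 3×3-window slope/aspect formulation that Esri documents for its Hillshade tool (default azimuth 315° and altitude 45°), applied to a single cell. It is an illustration only, not the exact settings used to make these maps, and the function name is hypothetical.

```python
import math
from typing import Sequence


def hillshade(window: Sequence[Sequence[float]], cell_size: float,
              azimuth_deg: float = 315.0, altitude_deg: float = 45.0,
              z_factor: float = 1.0) -> float:
    """Hillshade value (0-255) for the center cell of a 3x3 elevation window.

    window[0] is the northern row, and window[r][0] is the western column.
    """
    (a, b, c), (d, _e, f), (g, h, i) = window
    zenith = math.radians(90.0 - altitude_deg)
    # Convert compass azimuth (clockwise from north) to a math angle (counterclockwise from east).
    azimuth = math.radians((360.0 - azimuth_deg + 90.0) % 360.0)

    # Weighted differences across the window give the surface gradient.
    dz_dx = ((c + 2 * f + i) - (a + 2 * d + g)) / (8.0 * cell_size)
    dz_dy = ((g + 2 * h + i) - (a + 2 * b + c)) / (8.0 * cell_size)
    slope = math.atan(z_factor * math.hypot(dz_dx, dz_dy))

    # Aspect: the direction the slope faces, as a math angle in [0, 2*pi).
    if dz_dx != 0.0:
        aspect = math.atan2(dz_dy, -dz_dx)
        if aspect < 0.0:
            aspect += 2.0 * math.pi
    elif dz_dy > 0.0:
        aspect = math.pi / 2.0
    elif dz_dy < 0.0:
        aspect = 2.0 * math.pi - math.pi / 2.0
    else:
        aspect = 0.0  # flat cell: aspect is undefined, but slope is 0 so it has no effect

    shade = 255.0 * (math.cos(zenith) * math.cos(slope)
                     + math.sin(zenith) * math.sin(slope) * math.cos(azimuth - aspect))
    return max(shade, 0.0)  # slopes facing away from the light are clamped to black


if __name__ == "__main__":
    flat = [[10.0] * 3 for _ in range(3)]
    facing_nw = [[0.0, 1.0, 2.0], [1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]  # lower toward the northwest
    for name, win in (("flat", flat), ("facing NW", facing_nw)):
        print(f"{name}: {hillshade(win, cell_size=1.0):.1f}")
```

Slopes facing the light source come out brighter than flat ground and slopes facing away come out darker, which is what gives a hillshade its three-dimensional look on a map.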

Pix4D is user-friendly software that provides many features for analyzing data. Although this lab only scratched the surface of what the software can offer, the tasks completed were interesting and easy to accomplish. One useful feature of Pix4D is its ability to create video animations of study areas, which can help individuals analyze the data. Overall, this lab offered great practice in using Pix4D. It was nice to be able to use different features of Pix4D, ArcMap, and ArcScene together to create the final maps. This lab provided a strong foundation of knowledge of the software for use in future labs.

Sources:

https://pix4d.com/